As you may have already read in this morning’s EMC Data Domain and SourceOne blog, EMC is taking data protection to the next level, and to that end, version 7 of EMC SourceOne is now available. SourceOne 7 is the next-generation archiving platform for email, file systems, and Microsoft SharePoint content. It includes SourceOne Discovery Manager 7, and together they enhance an organization’s ability to protect and discover its data. In fact, ESG’s recent “Data Protection Matters” video featuring Steve Duplessie, ESG’s founder and Senior Analyst, makes the point that “backup and archive are different but complementary functions”, that both are “key pillars of a data protection strategy”, and that “backup without archive is incomplete”. I find this to be in perfect alignment with EMC’s strategy for data protection, and with this in mind, I’d like to review some of the great features of EMC SourceOne 7.

Let’s take file system data as an example. Have you ever needed to locate file system data in your infrastructure without a purpose-built archive to assist? Perhaps you were searching for data to reuse in the business, or responding to an eDiscovery request, an audit, or an investigation. How did that work for you? Often it’s a time-consuming exercise in futility or, at best, an incomplete exercise with non-defensible results. With the latest release of SourceOne for File Systems, a quick search can produce an accurate result set of all files that meet your search criteria, and you never have to physically archive that content. That’s because this release offers “Index in Place”, which enables organizations to index the terabytes (or petabytes!) of data that exist “in the wild” without moving that data into the archive. How cool is that? Users and applications continue to access that data transparently as needed, while a layer of corporate compliance sits on top. Now you can apply retention and disposition policies to this data, discover information when required, and move only the data that must be placed on “legal hold” into the physical archive.

SourceOne 7 uniquely addresses each form of content. Because our next-generation archiving family was built from the platform level up, all content types are managed cohesively, yet each type of content is archived in a way that complements the content itself. For instance, when archiving MS SharePoint content, you can:

- Externalize active data to:
  - Save on licensing and storage costs
  - Increase SharePoint’s performance
  - Provide transparent access to the content
- Archive inactive content to:
  - Further decrease storage and licensing requirements
  - Make data available for eDiscovery and compliance
  - Set consistent retention and disposition policies
  - Provide users with easy search and recall from the MS SharePoint interface

When it comes to the IT administrator, SourceOne offers plenty of advantages as well. The entire archive is managed from a single console: all email, MS SharePoint, and file system data is captured into one archive, which eases the administrative burden and reduces the margin of error when creating and executing policies against all types of content. IT admins can also monitor and manage the overall health of the archive server using their existing monitoring tools, such as Microsoft System Center Operations Manager (SCOM). Better ROI on existing monitoring tools, a minimal learning curve, and greater IT efficiency are all part of SourceOne 7. And of course, IT admins can take comfort in knowing that the data is protected while remaining transparently available to end users.

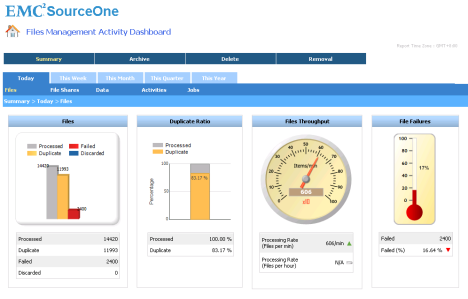

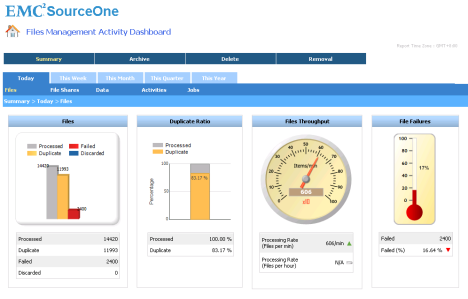

The shift in IT infrastructure certainly encompasses virtualization, and most organizations take advantage of our ability to virtualize SourceOne. This next-generation architecture allows worker servers (virtual or physical) to be “snapped on” without disrupting the processes running on the existing archive servers, so servers and services can expand and contract as necessary. And with all the new auditing and reporting capabilities in SourceOne 7, it’s a breeze to examine trends, ensure compliance, and determine when you may need to add or remove servers or virtual machines to handle the workload.

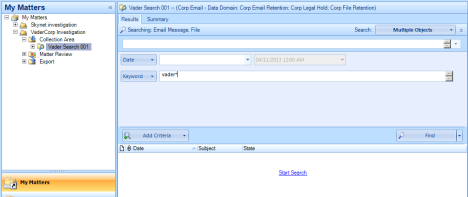

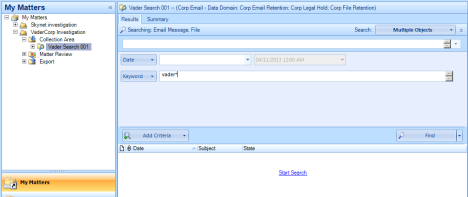

Every good archive deserves its own discovery tool, and with SourceOne Discovery Manager 7, you’ll find just that: an easy-to-use, intuitive interface that enables discovery of all email, SharePoint, and file system content within the archive.

With Discovery Manager you can:

- Collect archived data (even that “indexed in place” data) to be managed as part of a matter

- Place into hold folders

- Perform further review, culling, and tagging

- Export to industry-standard formats such as EDRM XML 1.0/1.2 and others

Data protection requirements are changing with data growth and recovery needs, and “one size fits all” recovery is no longer a viable option. Addressing all of these data protection challenges takes a holistic approach to managing this business-critical information. EMC solutions, which include SourceOne 7 for archiving and eDiscovery in conjunction with our best-of-breed backup and hardware platforms, make this happen. On that note, please be sure to read about the new Data Domain 5.3 capabilities for backup and archive in the accompanying blog post. To find more information on EMC SourceOne, please visit our EMC.COM SourceOne Family and Archiving websites.
